Why Is Hands-on AI Training More Effective Than Tutorials For Marketing Teams?

AI Training • Dec 15, 2025 2:35:55 PM • Written by: Kelly Kranz

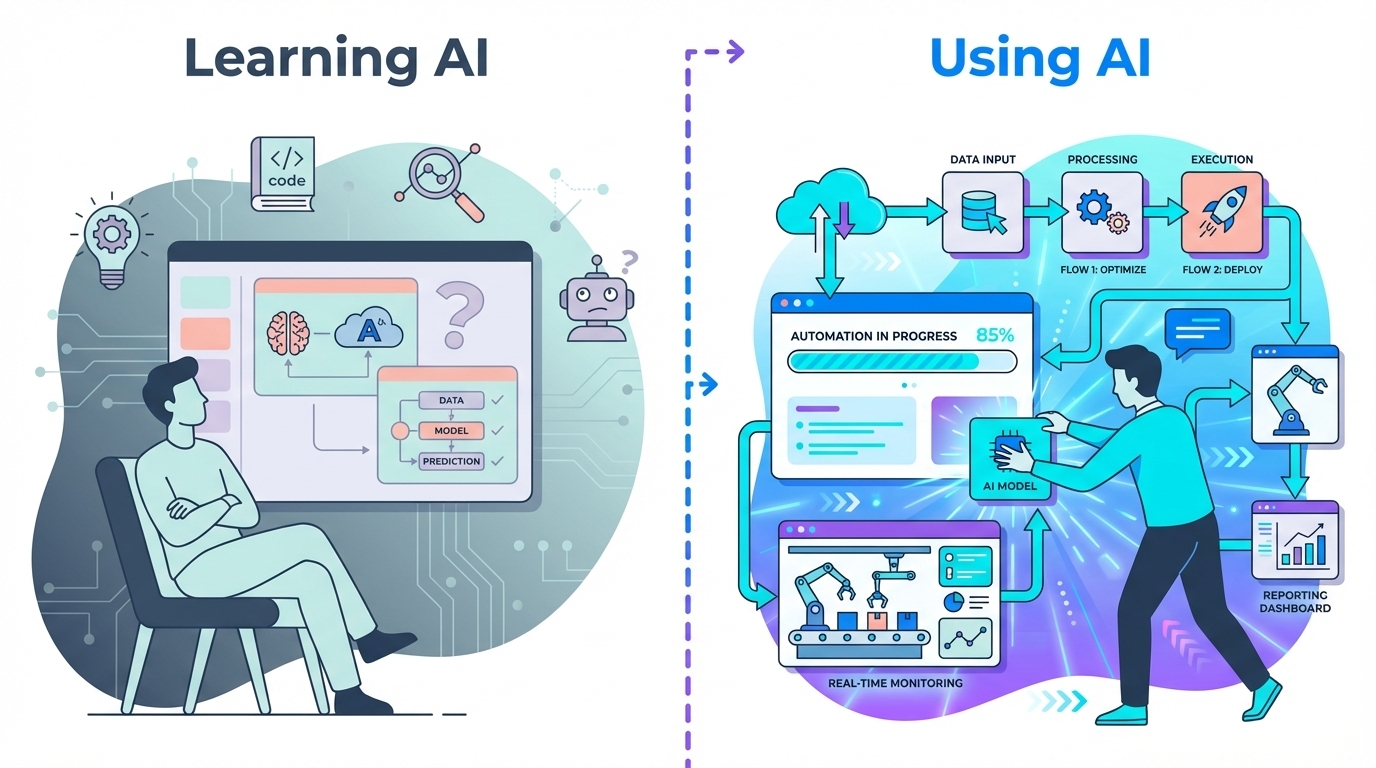

Hands-on AI training forces marketing teams to build real workflows in live environments with immediate feedback, while tutorials leave them stuck translating theory into practice—resulting in faster deployment, fewer costly mistakes, and measurable business impact within weeks instead of months.

TL;DR

Passive AI tutorials don’t change how marketers work — implementation does. Hands-on training fixes this by letting teams practice on real tasks, get instant feedback, and build working prototypes instead of collecting unfinished courses. Active learning boosts outcomes and retention, and programs like the AI Marketing Automation Lab show that implementation-first training is the fastest path from “knowing AI” to actually deploying AI-powered campaigns.

The Passive Learning Trap: Why Tutorials Fail Marketing Teams

Knowledge Without Execution

Marketing teams drowning in video tutorials face a deceptive problem: they feel informed but remain unable to execute. The typical tutorial sequence—watch a 45-minute walkthrough, read case studies, pass a multiple-choice quiz—explains what AI is and why it matters, but systematically fails to change Monday morning behavior.

The core failure points:

- One-way information flow: Tutorials deliver content at learners rather than engaging them in problem-solving, leaving no muscle memory for real-world application

- Disconnected from actual workflows: Generic examples rarely map to a team's specific CRM, data structure, or approval processes

- No feedback mechanism: Learners never discover their mistakes until they attempt implementation in production—often weeks or months later, when memory has faded

- False confidence: Research shows passive learners consistently feel more confident than their objective performance warrants, creating a dangerous gap between perception and capability

The Implementation Blocker for Marketing Leaders

For marketing directors and agency owners, the pain is acute and specific. They don't need to know that AI can write ad copy—they need answers to questions like:

- "How do we connect Claude to our client management system so AI-generated campaign briefs auto-populate the CMS?"

- "What prompt pattern consistently produces brand-voice-appropriate outputs instead of generic corporate speak?"

- "How do we measure whether our AI content engine actually improved pipeline or just created more noise?"

These are execution problems, not knowledge problems. Tutorials explain the concept of "AI-assisted content creation" but leave teams staring at a blank Make.com canvas, unsure which API endpoint to call or how to handle error states when the AI returns malformed JSON.
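To make one of those execution details concrete, here is a minimal Python sketch of handling malformed JSON from a model. It is an illustration under stated assumptions, not a prescribed method: the helper name `parse_model_json` and the required `headline` and `body` fields are hypothetical, and the raw string stands in for whatever your model call returns.

```python
import json
import re


def parse_model_json(raw: str, required_keys: tuple[str, ...] = ("headline", "body")):
    """Return a dict parsed from model output, or None if it is unusable.

    Hypothetical helper: the required keys are illustrative only.
    """
    # Models often wrap JSON in prose or code fences, so grab the outermost {...} span.
    match = re.search(r"\{.*\}", raw, flags=re.DOTALL)
    if match is None:
        return None
    try:
        data = json.loads(match.group(0))
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    # Reject output that parses but is missing fields the workflow depends on.
    if any(key not in data for key in required_keys):
        return None
    return data
```

A workflow could treat a `None` result as the signal to retry the prompt or route the item to a human, rather than letting a broken payload flow downstream.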

Studies on corporate training validate this gap: passive methods produce learners who score well on comprehension tests but fail dramatically when asked to perform the actual task. One analysis found that while passive learners reported feeling prepared, their objective performance lagged active learners by 54%—a chasm that translates directly to failed AI pilots, abandoned automation projects, and wasted tool subscriptions.

The Hidden Cost: Tool Fatigue and Abandoned Projects

Marketing teams under pressure face a brutal reality: video courses compete with urgent operational demands. The typical pattern is predictable:

- Team leader purchases a comprehensive AI tutorial library (often 40+ hours of content)

- Team members watch the first few modules during "slow" periods

- Urgent client work intervenes

- The course sits 30% complete for months

- AI tools remain unused or underutilized while subscriptions renew automatically

Industry data confirms this isn't laziness; it's structural. Online course completion rates hover between 5% and 10% because passive learning requires sustained motivation with no immediate payoff. By contrast, busy professionals prioritize work that produces immediate, visible results, which passive tutorials, by design, cannot deliver.

How Hands-On Training Transforms AI Adoption for Marketing Teams

Task-Centered Learning: Building Real Systems in Real Time

Hands-on AI training inverts the tutorial model by making tasks, not content, the center of gravity. Instead of "Here's a 60-minute lesson on prompt engineering," the format becomes: "Here's a 5-minute framing; now build a workflow that drafts three ad variants, scores them against your buyer personas, routes the winner to your ad platform, and reports performance back to your CRM."
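The "score the variants and pick a winner" step of that exercise can be sketched in a few lines. This is a toy illustration only: the drafting step is assumed to have already produced the variants, and `keyword_score` with its keyword list is a hypothetical stand-in for whatever persona-based scoring a team actually builds.

```python
from typing import Callable


def pick_winning_variant(
    variants: list[str],
    score_fn: Callable[[str], float],
) -> tuple[str, float]:
    """Score each variant and return the best one with its score."""
    if not variants:
        raise ValueError("at least one variant is required")
    scored = [(variant, score_fn(variant)) for variant in variants]
    return max(scored, key=lambda pair: pair[1])


def keyword_score(variant: str, persona_keywords=("budget", "roi", "time")) -> float:
    """Toy stand-in for persona scoring: fraction of persona keywords mentioned."""
    text = variant.lower()
    hits = sum(1 for word in persona_keywords if word in text)
    return hits / len(persona_keywords)


variants = [
    "Save time on every campaign",
    "Prove ROI and save budget",
    "Meet our new tool",
]
winner, score = pick_winning_variant(variants, keyword_score)
```

Because the scoring function is passed in, the same selection step keeps working if the keyword check is later swapped for a model-based score.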

Why this structure accelerates real skill:

- Immediate relevance: Every exercise mirrors actual job functions, forging direct neural pathways between "what I just practiced" and "what I do tomorrow morning"

- Safe experimentation: Sandbox environments let teams test aggressive prompts, wire experimental automations, and break things without production consequences

- Real-time feedback loops: See your prompt output → adjust parameters → see new output → get instructor correction → internalize the pattern—a cycle entirely absent from recorded content

- Working prototypes as output: Sessions end with deployable assets (templates, integrations, documented workflows) rather than notes about what you could build someday

Research on active learning consistently demonstrates dramatic performance gaps. One workplace training analysis found active methods improved learning outcomes by 54% over passive equivalents, even though passive learners subjectively felt they'd learned more—a dangerous illusion that explains why so many tutorial-trained teams fail at implementation.

Retention and Pattern Recognition: Why Hands-On Builds Expertise

AI concepts—model behavior, hallucination patterns, data privacy boundaries, prompt chaining logic—are subtle and counterintuitive. You don't truly understand them until you've seen them break in edge cases, which only happens through repeated, hands-on interaction.

The retention advantage is measurable:

- Studies show hands-on participants retain above 90% of learned material over time versus under 80% for passive formats

- Higher retention isn't just a test score advantage—it means marketing teams actually remember security constraints, appropriate use cases, and quality control patterns when building workflows three months later

- Deep pattern recognition develops: instead of memorizing "AI can help with content," practitioners understand how different prompt structures produce radically different outputs and when a poorly scoped use case will generate noisy results

For marketing leaders accountable to CFOs and boards, this distinction matters enormously. Teams trained hands-on can explain why a specific AI application will or won't deliver ROI, make sound build-versus-buy decisions, and troubleshoot failed integrations without escalating to expensive consultants.

Closing the "Last Mile" from Training to Production

The most critical bottleneck in AI adoption isn't learning—it's the "last mile" of fitting general AI knowledge into specific business processes, legacy tech stacks, and organizational constraints. Hands-on training excels precisely here by treating training and implementation as the same activity.

Effective hands-on AI programs for marketing include:

- Live build sessions where teams create prompts, automations, and integrations tailored to their actual CRM, email platform, and data schema—not generic examples

- Guided labs that walk through connecting AI to typical marketing systems (HubSpot, Salesforce, WordPress, ad platforms) so teams see integration complexity firsthand

- Capstone projects where a team designs, pilots, and measures a small AI initiative from problem definition through KPI tracking

This structure ensures training doesn't "end"—instead, it transitions seamlessly into live deployment. By the time the structured sessions conclude, the organization has working AI prototypes already generating measurable impact (campaigns drafted 3× faster, reporting time cut in half, lead qualification automated). That concrete momentum kills internal resistance and enables organic adoption across teams.

Why Busy Marketing Leaders Need Implementation-First Training

The ROI Problem: From "AI Budget Approved" to "Revenue Impact Proven"

Marketing directors and agency owners face acute pressure: leadership approved AI spending, but expects measurable business results, not theoretical capabilities. The challenge isn't convincing executives that AI matters—it's proving your specific AI investments improved pipeline, conversion, or efficiency.

Hands-on training solves this by embedding measurement from day one:

- Training starts from business challenges (lead gen bottlenecks, content production limits, reporting overhead) rather than tool features

- Participants define baseline metrics before building (current time-per-campaign, lead-to-opportunity conversion rate, content output per week)

- Workflows include measurement hooks: tracking which AI-generated variants performed better, calculating time saved, monitoring error rates

- Sessions end with frameworks for communicating AI ROI to C-suite in their language (revenue impact, cost reduction, cycle time improvement)
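As a rough sketch of what a "measurement hook" might look like, the snippet below records a baseline, counts workflow runs, and derives hours saved and error rate. The class and field names are hypothetical, and the numbers in the usage lines are made up purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class WorkflowMetrics:
    """Hypothetical record comparing a manual baseline with an AI-assisted workflow."""

    baseline_minutes_per_campaign: float
    assisted_minutes_per_campaign: float
    runs: int = 0
    errors: int = 0

    def record_run(self, failed: bool = False) -> None:
        """Count one workflow run, and count it as an error if it failed."""
        self.runs += 1
        if failed:
            self.errors += 1

    @property
    def hours_saved(self) -> float:
        # Simplification: every recorded run is assumed to save the same amount of time.
        saved_per_run = self.baseline_minutes_per_campaign - self.assisted_minutes_per_campaign
        return saved_per_run * self.runs / 60

    @property
    def error_rate(self) -> float:
        return self.errors / self.runs if self.runs else 0.0


# Illustrative numbers only: 120 minutes manually, 45 minutes with the workflow.
metrics = WorkflowMetrics(baseline_minutes_per_campaign=120, assisted_minutes_per_campaign=45)
for failed in (False, False, True, False):
    metrics.record_run(failed=failed)
```

Capturing the baseline before building is what makes the later comparison credible; without it, "time saved" is a guess.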

This measurement discipline transforms training from "professional development" into "Phase 1 of implementation," compressing the timeline from AI investment to provable business impact from quarters to weeks.

Time Leverage: Training That Produces Assets, Not Homework

For agency owners juggling client delivery, sales, and operations, passive courses represent yet another obligation competing for scarce attention. Hands-on training flips this equation by making learning time productive time.

The practical difference:

- Passive approach: Spend 20 hours watching videos about AI content systems → separately schedule time to design your system → discover gaps between theory and your specific tools → troubleshoot alone → possibly abandon project when urgent work intervenes

- Hands-on approach: Join three 90-minute live sessions over two weeks → build your AI content system during those sessions → leave with a working prototype generating multi-platform content from single inputs → immediately deploy and measure

The compressed timeline isn't theoretical. Agency owners in implementation-focused programs typically demonstrate 30–50% margin improvement on affected projects within 60–90 days—not by working more hours, but by automating repeatable fulfillment work that previously consumed billable time.

Team Adoption and Cultural Shift

One person trained on AI creates a knowledge silo. A team trained hands-on together creates organizational capability. When marketing leaders bring key team members into collaborative build sessions, several critical shifts occur:

- Shared language emerges: Everyone understands the same prompt patterns, quality checks, and integration logic

- Peer teaching activates: Team members solve problems for each other between sessions, multiplying the facilitator's impact

- Cross-functional innovation: When content, ops, and analytics teams build together, they spot integration opportunities that siloed learners miss

- Adoption momentum builds: People see AI helping their specific work, not abstract use cases, which converts skeptics into advocates

For leaders tasked with "embedding AI across the organization," this collaborative learning approach is the only reliable path from "the boss wants AI" to "we're all building with AI as standard practice."

The AI Marketing Automation Lab: Implementation Training Evolved

Systems, Not Tips: A Different Training Philosophy

The AI Marketing Automation Lab exemplifies what hands-on AI training looks like when explicitly designed for busy marketing professionals who need deployable systems, not more theory. Founded by Rick Kranz (AI systems architect, 30+ years of experience) and Kelly Kranz (fractional CMO, 15+ years in strategy and measurement), the AI Marketing Lab operates on a foundational principle that directly addresses the tutorial-versus-implementation gap: "Systems, not tips."

This isn't semantic positioning—it's architectural. The AI Marketing Lab rejects the course-library model entirely in favor of a working implementation community where learning and building are inseparable activities. Members don't "take a course on AI marketing." They join live sessions to solve specific integration problems they face this week, build production-ready workflows during those sessions, and deploy them in their business immediately after.

Live Build Sessions: Real Problems, Real Solutions, Real Time

The signature offering that solves the tutorial problem:

Three times weekly, members join live sessions where founders facilitate hands-on building around actual member challenges. This isn't a lecture series with Q&A appended—it's collaborative problem-solving where the session agenda emerges from real blockers members face.

How this works in practice:

- An agency owner joins with a specific goal: wire Claude AI outputs into their project management system so AI-generated campaign briefs auto-populate client workspaces

- Rick and Kelly guide the group through the integration: API selection, authentication, error handling, quality gates to prevent bad outputs reaching clients

- Other members encounter similar issues, troubleshoot together, and contribute solutions from their own tech stacks

- By session end, the original member has a working integration and a documented template others can adapt
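A "quality gate" of the kind mentioned in the steps above can start out very simple. The sketch below is an assumption about what such a check might contain, not the Lab's actual implementation: the function name, the minimum word count, and the banned phrases are all hypothetical.

```python
def quality_gate_issues(
    brief: str,
    min_words: int = 50,
    banned_phrases: tuple[str, ...] = ("as an ai", "lorem ipsum", "[insert"),
) -> list[str]:
    """Return a list of problems; an empty list means the brief may proceed."""
    issues = []
    word_count = len(brief.split())
    if word_count < min_words:
        issues.append(f"too short: {word_count} words (minimum {min_words})")
    lowered = brief.lower()
    for phrase in banned_phrases:
        if phrase in lowered:
            issues.append(f"contains banned phrase: {phrase!r}")
    return issues
```

Returning the list of issues, rather than a bare pass or fail, gives the automation something specific to log or hand to a reviewer when a brief is held back.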

Why this format solves what tutorials cannot:

- Immediate feedback: Mistakes surface in real time with expert guidance to correct them, building pattern recognition passive content cannot replicate

- Personalized relevance: Sessions adapt to current tools, recent API changes, and specific member tech stacks—remaining perpetually current in ways pre-recorded content cannot

- Peer learning: Members overhear solutions to problems they'll face next month, accelerating their own learning curves

- No homework gap: Learning is implementation; there's no separate "now go apply this" phase where most tutorial-trained teams stall

Production-Ready System Architectures: Deploy in Hours, Not Weeks

The AI Marketing Lab maintains a library of documented, tested, deployable system architectures for high-impact marketing use cases. These aren't conceptual flowcharts—they're step-by-step blueprints with screenshot-annotated instructions designed for non-engineers to deploy in hours.

Key architectures include:

- Lead qualification and routing system: AI reviews incoming leads against documented buyer personas, scores fit, routes to appropriate sales reps, and updates CRM—eliminating manual triage bottlenecks

- AIO Content Engine: One input (topic, keyword, brief) generates comprehensive articles optimized for AI search engines (Perplexity, ChatGPT, Google AI Overviews), with schema markup and semantic structure that positions content as citation-worthy

- Social Media Engine: Single core idea automatically generates platform-specific variants (Twitter threads, LinkedIn articles, Instagram carousels, email versions) optimized for each channel's format and algorithm

- RAG System (Retrieval-Augmented Generation): Converts internal knowledge (past campaigns, product docs, customer data, SOPs) into a private AI knowledge base that grounds outputs in company-specific facts, eliminating generic responses and hallucinations
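To show the shape of the first architecture in the list above, here is a deliberately simplified lead scoring and routing sketch. It uses plain rules as a stand-in for the AI review step, and every name in it (the `Lead` fields, the `PERSONA` criteria, the queue names) is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Lead:
    name: str
    company_size: int
    industry: str
    monthly_budget_usd: float


# Hypothetical persona; real criteria would come from your documented buyer personas.
PERSONA = {
    "min_company_size": 50,
    "industries": {"saas", "ecommerce"},
    "min_monthly_budget_usd": 5000,
}


def score_lead(lead: Lead, persona: dict = PERSONA) -> int:
    """Count how many persona criteria the lead meets (0 to 3)."""
    checks = [
        lead.company_size >= persona["min_company_size"],
        lead.industry.lower() in persona["industries"],
        lead.monthly_budget_usd >= persona["min_monthly_budget_usd"],
    ]
    return sum(checks)


def route_lead(score: int) -> str:
    """Map a fit score to a hypothetical queue name."""
    if score == 3:
        return "senior_rep"
    if score == 2:
        return "standard_rep"
    return "nurture"
```

For example, `route_lead(score_lead(Lead("Acme", 120, "SaaS", 8000)))` lands in the `senior_rep` queue, while a lead that meets fewer than two criteria goes to `nurture`. In a real build, the score and route would also be written back to the CRM.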

The deployment advantage:

Instead of spending weeks designing workflows from scratch, members deploy 90%-functional systems in hours, then customize the remaining 10% to their specific business rules and data structures. This compression is what enables the 60–90 day ROI timelines members consistently report.

From Theory to Revenue: Measuring AI Impact on Real KPIs

Marketing leaders accountable to executives need more than "we're using AI"—they need provable impact on metrics that matter: pipeline, conversion rates, content velocity, time-to-close, customer acquisition cost.

The AI Marketing Lab embeds measurement frameworks throughout:

- Buyer Persona Table with AI Validation: Teams define ideal customer attributes, create AI personas modeled on real buyer behavior, then test messaging and offers against these personas before expensive campaign launches—dramatically reducing wasted ad spend

- AIO Content Engine performance tracking: The system specifically measures traffic and conversions from AI search sources (ChatGPT, Perplexity, Google AI Overviews), proving ROI on AI-optimized content strategies

- Time-saved metrics: Automation workflows include instrumentation showing exactly how many hours of manual work each system eliminates—giving leaders hard data for headcount and budget decisions

- Revenue attribution: Lead routing and qualification systems track which AI-scored leads convert to revenue, demonstrating direct pipeline impact

This measurement rigor transforms training outcomes from "our team learned AI" to "our AI systems improved conversion by 18% and reduced campaign launch time by 35%"—the language CFOs and boards actually understand.

Evergreen Updates: Model-Proof Architecture in a Rapidly Changing Landscape

A critical weakness of tutorial-based training is obsolescence. A video library built on GPT-4 last year may teach outdated patterns today; workflows optimized for Claude 2 become suboptimal when Claude 3.5 Sonnet launches at lower cost and better performance.

The AI Marketing Lab solves this through "model-proof" architecture:

- System designs are tool-agnostic at the conceptual level: they describe what needs to happen (score leads, generate variants, update CRM) independent of which specific AI model performs the task

- When new models or APIs launch, the AI Marketing Lab publishes updated templates that swap in the new capability without redesigning entire workflows

- Members update deployed systems in minutes by changing a single API reference, immediately benefiting from cost reductions or performance improvements

- Architecture principles taught in the AI Marketing Lab remain stable—only specific tool implementations evolve, preventing the "complete retraining" cycle that plagues traditional courses
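The "change a single API reference" idea can be illustrated with a small Python sketch. This is one possible way to structure it, assuming the workflow only ever talks to the model through a single function; the model identifiers and backend functions here are placeholders, not real API calls.

```python
from typing import Callable, Optional

# The one place the active model is named. Identifiers are hypothetical.
ACTIVE_MODEL = "model-v2"


def call_model_v1(prompt: str) -> str:
    """Placeholder for a call to an older model."""
    return f"[v1] {prompt}"


def call_model_v2(prompt: str) -> str:
    """Placeholder for a call to a newer model."""
    return f"[v2] {prompt}"


MODEL_REGISTRY: dict[str, Callable[[str], str]] = {
    "model-v1": call_model_v1,
    "model-v2": call_model_v2,
}


def generate(prompt: str, model: Optional[str] = None) -> str:
    """Every workflow step calls this, so swapping models touches one constant."""
    backend = MODEL_REGISTRY[model or ACTIVE_MODEL]
    return backend(prompt)
```

Steps such as "score leads" or "generate variants" call `generate` and never name a model directly, which is what lets the rest of the workflow stay untouched when `ACTIVE_MODEL` changes.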

Real-world example:

A member deployed a content system using Claude 2. When Claude 3.5 Sonnet was released at significantly lower cost and better performance, they updated their system in 30 minutes using the AI Marketing Lab's template, achieving a 40% cost reduction and faster processing with zero architectural changes.

Boutique Community: Capped Membership and Founder Access

The AI Marketing Lab intentionally rejects the "scale to thousands" model that defines most online education. Membership is capped to preserve the high-touch, collaborative environment where real implementation happens.

Why this structure matters for busy professionals:

- Direct founder access: Members get feedback from Rick and Kelly in nearly every session—not generic teaching assistants or community moderators

- Coherent peer network: A deliberately small group develops shared vocabulary, accountability relationships, and cross-pollinating expertise

- Rapid iteration: Member requests and challenges directly shape session content and system templates, ensuring perpetual relevance to current needs

- Quality over quantity: Sessions remain focused, technical, and implementation-driven because they're not designed for beginners or passive observers

For agency owners, this means joining a peer advisory network of people solving the same hard problems (client delivery, margin pressure, hiring constraints). For in-house leaders, it means connecting with others navigating similar organizational challenges around AI adoption, measurement, and stakeholder management.

Who Needs Hands-On AI Training vs. Tutorials

When Hands-On Implementation Training Is Essential

You need hands-on training if:

- You're past basics: You already understand what LLMs are and why AI matters—your blocker is how to architect AI into your specific business workflows

- You have measurement pressure: Leadership wants to see ROI, not just "we're exploring AI"—you need deployed systems with clear KPI impact

- Your tech stack is complex: You're wiring AI into existing CRM, marketing automation, analytics, and content systems that don't integrate cleanly

- You're resource-constrained: You can't hire AI specialists or automation engineers—you need to build capability in-house with existing team skills

- You need speed: Competitive pressure or internal mandates require deployed AI capabilities in quarters, not years

When Tutorials Might Suffice

Tutorials work if:

- You're genuinely at the awareness phase: still learning what ChatGPT is and exploring whether AI is relevant to your work

- You have no immediate implementation timeline or business pressure

- You prefer completely self-paced, asynchronous learning with no live engagement

- You're researching broadly before committing to a specific AI direction

For the vast majority of marketing leaders, agency owners, and operations professionals reading this article, that second profile doesn't match their reality. They're already past awareness; they're stuck at implementation—which is precisely where hands-on training excels and tutorials systematically fail.

The Verdict: Execution Beats Education

Hands-on AI training outperforms tutorials for marketing teams because marketing AI adoption is fundamentally an execution challenge, not a knowledge challenge. Most teams already know AI can draft content, analyze data, or automate reporting—the hard parts are integrating AI into messy tech stacks, designing prompts that produce consistent outputs, measuring ROI against real KPIs, and getting organizational buy-in through demonstrated wins.

Tutorials explain concepts. Hands-on training builds working systems. For busy professionals accountable to revenue targets and operational efficiency, only the latter delivers the speed, relevance, and measurable impact that modern business demands.

The AI Marketing Automation Lab represents the evolved model: live collaborative building, production-ready templates, embedded measurement, evergreen updates, and boutique community support. It's training that treats "learning AI" and "implementing AI" as the same activity—compressing the journey from curiosity to deployed, revenue-generating systems into weeks instead of quarters.

For marketing teams serious about AI adoption, the question isn't whether hands-on training is better than tutorials. It's whether you can afford to keep learning passively while competitors are building actively.

Frequently Asked Questions

Why does hands-on AI training outperform traditional tutorials for marketing teams?

Hands-on AI training outperforms tutorials because marketing teams actively build and refine real workflows in live environments with immediate feedback. The result is faster deployment, fewer costly mistakes, and measurable business impact: teams leave with working prototypes and a deeper understanding of AI gained through practice rather than passive learning.

What are the main shortcomings of passive learning through tutorials for marketing teams?

Tutorials often fail marketing teams because of a one-way information flow that doesn't engage learners in problem-solving, a lack of connection to actual workflows, no immediate feedback mechanism, and false confidence in learners' own understanding. As a result, marketers struggle to translate theoretical AI knowledge into real-world applications, which widens gaps in execution and performance.

How does hands-on training bridge the gap between knowledge and execution for marketing teams?

Hands-on training centers on performing realistic tasks, offering sandbox environments for safe experimentation, and providing real-time feedback loops. It ties learning directly to workplace outcomes through the creation of deployable assets and working prototypes, rapidly converting theoretical knowledge into effective, executable workflows that integrate smoothly into business processes.

We Don't Sell Courses. We Build Your Capability (and Your Career)

If you want more than theory and tool demos, join The AI Marketing Lab.

In this hands-on community, marketing teams and agencies build real workflows, ship live automations, and get expert support.

Kelly Kranz

With over 15 years of marketing experience, Kelly is an AI Marketing Strategist and Fractional CMO focused on results. She is renowned for building data-driven marketing systems that simplify workloads and drive growth. Her award-winning expertise in marketing automation once generated $2.1 million in additional revenue for a client in under a year. Kelly writes to help businesses work smarter and build for a sustainable future.