What Are the Best AI Training Frameworks for Digital Agencies?

AI Training • Dec 15, 2025 2:51:28 PM • Written by: Kelly Kranz

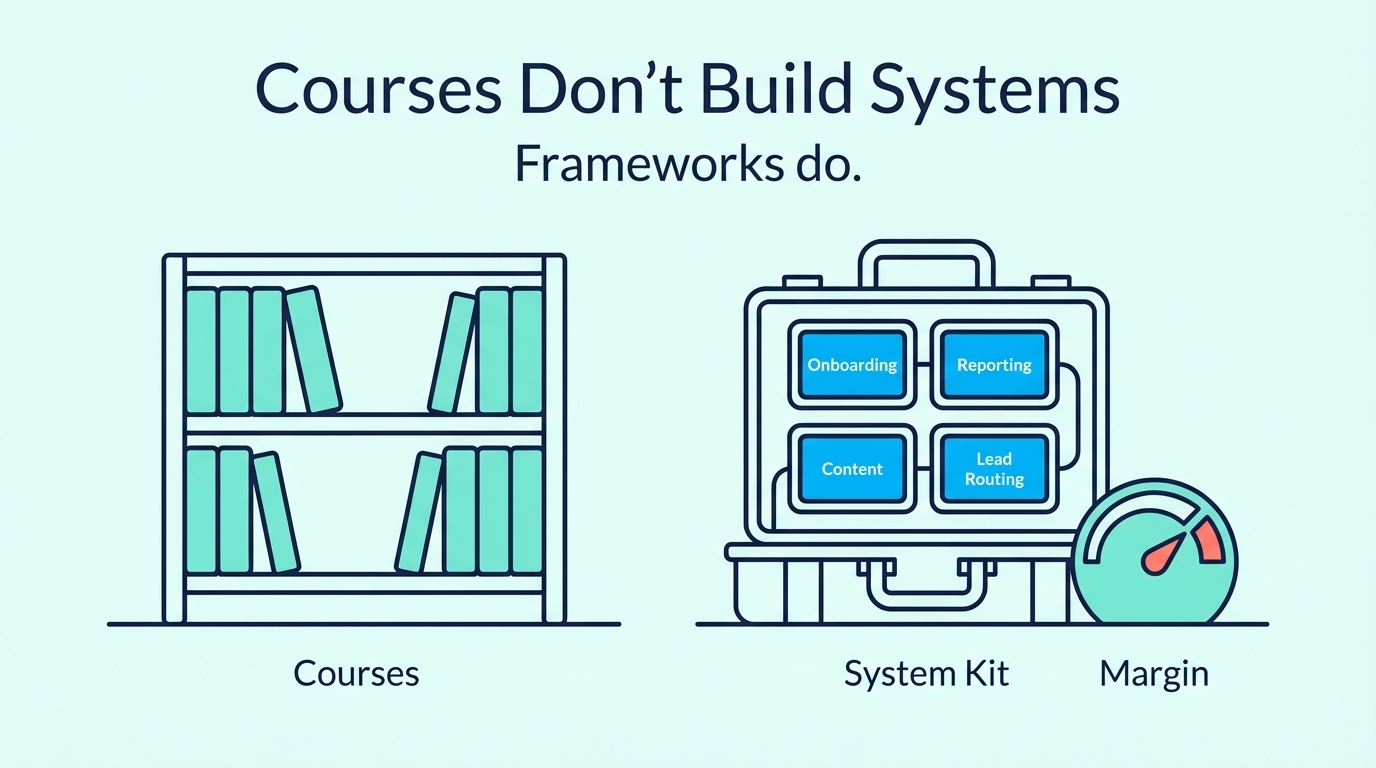

The best AI training frameworks for digital agencies are modular, project-based systems that integrate hands-on building with measurable business outcomes, real client scenarios, and production-ready architectures—not passive video libraries.

TL;DR

- Digital agencies don’t need more theory; they need AI training that improves margins and creates competitive differentiation.

- Passive video courses boost confidence but don’t translate into implementation or deployable systems.

- Hands-on, done-with-you frameworks compress learning + execution so teams build while they learn.

- The article explains why traditional training fails busy agency owners and how the AI Marketing Automation Lab fixes this with live implementation, production-ready architectures, peer support, and constant updates.

Why Standard AI Training Fails Digital Agencies

The Implementation Gap: Knowledge Without Systems

Most digital agency teams have already watched webinars, read case studies, and enrolled in AI courses. They understand that large language models can write copy, analyze data, and automate workflows. Yet when they attempt to integrate AI into client deliverables—connecting models to CRMs, building reliable content pipelines, or architecting multi-step automations—they hit a wall.

The problem is not awareness; it's execution.

Traditional passive learning formats—pre-recorded video courses, lecture-style webinars, and read-then-quiz modules—teach the "what" and "why" of AI, but systematically fail to address the "how" of real-world implementation. Research on corporate training confirms this gap: passive methods often leave learners feeling confident, but objective performance scores are significantly lower than for active, hands-on approaches. One comprehensive analysis found that active learning improved outcomes by approximately 54%, even though passive learners reported feeling like they'd learned more.

For agencies operating under tight margins and client deadlines, this confidence-competence gap is expensive. Teams think they're ready to deploy AI after completing a video playlist, but when confronted with messy client data, conflicting tool requirements, and production-level quality standards, the skills simply don't transfer.

The Busy Owner's Dilemma: Learning as Another Task

Agency owners face a specific constraint that standard training frameworks ignore: they cannot afford to treat learning as separate from delivery work.

A typical online AI course demands 10–20 hours of passive consumption spread across weeks, with "homework" exercises that use generic examples rather than real client workflows. For an owner juggling sales calls, client relationships, team management, and firefighting, this model guarantees one outcome: the course sits 30% complete in their browser tabs while urgent work takes priority.

The broader data supports this pattern. Video-based courses typically see 5–10% completion rates, reflecting the disconnection between passive format and pressing business needs. Meanwhile, agency owners need to simultaneously:

- Learn how AI can improve fulfillment efficiency

- Decide which AI investments justify budget allocation

- Implement working systems that serve real clients

- Measure ROI to justify expansion

Passive courses address only the first item. The other three—decision, implementation, and measurement—require applied problem-solving that cannot be learned by watching someone else work through sanitized examples.

The Frankenstack Problem: Generic Training, Specific Infrastructure

Every agency has accumulated a unique technology stack over years: a CRM (HubSpot, Salesforce, Pipedrive), project management tools (Asana, ClickUp, Monday), communication platforms (Slack, Teams), marketing automation (ActiveCampaign, Mailchimp, Klaviyo), and content systems (WordPress, Webflow, Notion). These tools often don't integrate cleanly.

Generic AI training teaches principles using the instructor's preferred stack—usually a simple demo environment with minimal real-world constraints. When an agency owner tries to apply those lessons to their own Frankensteined infrastructure, they encounter:

- API authentication challenges not covered in the course

- Data format mismatches between systems

- Permission and security constraints their IT or clients impose

- Tool-specific limitations the course never mentioned

Each blocker requires research, troubleshooting, and experimentation. Without real-time guidance, these obstacles often halt implementation entirely. The agency owner is left with theoretical knowledge but no functioning system—and no time to bridge the gap alone.

Lack of Strategic Context: Tools Without Business Models

Many AI courses teach tools and techniques in isolation: "Here's how to use ChatGPT for content creation," or "Here's a Make.com workflow template." While these lessons transfer technical skills, they rarely answer the business questions agency owners actually face:

- Which client workflows generate the highest ROI when augmented with AI?

- How do we price AI-powered services competitively while protecting margin?

- What quality assurance processes prevent AI outputs from damaging client trust?

- How do we position AI services to differentiate from competitors who claim to 'use ChatGPT'?

Without strategic frameworks that connect technical capabilities to agency business models, owners are left guessing about prioritization. They may automate low-value tasks while neglecting high-impact opportunities, or launch AI services without the operational foundation to deliver consistently.

This strategic void is why many agencies experiment with AI but fail to convert those experiments into revenue-generating service lines.

The Case for Hands-On, Project-Based AI Frameworks

Active Learning Architecture: Building While Learning

Effective AI training for agencies must invert the traditional model. Instead of "consume content, then try to apply it later," the framework should be: "here's a business problem; let's architect an AI solution together, right now."

This shift centers learning on tasks, not content. Rather than a 45-minute lesson on generative AI followed by a quiz, participants receive a brief framing and then immediately build: "Design an AI workflow that generates three ad variants, pushes them to your client's ad platform, and reports performance metrics back to your dashboard."

Several structural elements make this approach dramatically more effective for busy professionals:

Real Client Workflows as Learning Vehicles

High-impact AI training for agencies uses realistic scenarios that mirror actual deliverables:

- Building AI-assisted client onboarding sequences

- Architecting automated reporting systems that pull from multiple data sources

- Designing content engines that maintain client brand voice at scale

- Creating lead qualification workflows specific to client industries

When training tasks are indistinguishable from billable work, three outcomes occur simultaneously:

- Engagement remains high because the work directly serves immediate business needs

- Learning transfer is immediate because there's no "translation step" between training and application

- ROI is measurable because the training outputs are production-ready assets

This relevance is not just motivational—it's structural. The mental link between "what I just learned" and "what I will deploy Monday morning" creates neural pathways that passive observation cannot forge.

Safe Sandbox Environments for Experimentation

Production systems demand reliability. Clients cannot tolerate broken automations, hallucinated content, or data leaks. Yet mastery of AI requires experimentation, aggressive testing, and intentional failure.

Strong hands-on frameworks resolve this tension through sandbox environments—isolated labs where participants can:

- Test prompts with extreme inputs to find edge cases

- Wire together complex automations without risking client data

- Explore model behaviors (including failures) to develop accurate mental models

- Practice recovery procedures when AI systems produce bad outputs

This safety creates psychological permission to fail, which is essential for developing robust AI literacy. Participants learn not just what AI can do, but where it breaks—and how to design guardrails accordingly.

Immediate Feedback Loops: Attempt → Result → Adjustment

The defining feature of effective skill acquisition is rapid feedback: you try something, see the result, receive expert guidance, and adjust. This "attempt → result → feedback → adjustment" cycle is almost entirely absent from passive training, where the only feedback is a multiple-choice score delivered after consumption ends.

In live, hands-on AI training:

- Participants build a prompt or workflow

- The system executes and produces output (often flawed)

- An expert facilitator identifies the failure mode and explains why it occurred

- Participants modify their approach and immediately test again

This cycle repeats dozens of times in a single session, creating pattern recognition that passive watching cannot replicate. Studies in workplace training report that active formats with real-time feedback achieve retention rates above 90%, compared with under 80% for passive equivalents.

For agencies, higher retention is not academic—it means team members reliably remember data security constraints, quality thresholds, and brand guidelines when building client-facing AI systems.

Why Agencies Need Modular, Production-Ready Architectures

The "Last Mile" Problem: From Concept to Deployed System

Even when agency teams understand AI capabilities, they often stall at the "last mile"—taking general knowledge and translating it into specific, deployed systems that serve real clients under production constraints.

This last mile includes:

- Integration work: Connecting AI models to existing CRMs, project management tools, and client platforms

- Quality assurance: Implementing review workflows so bad AI outputs never reach clients

- Performance measurement: Instrumenting systems to track time saved, quality metrics, and client satisfaction

- Documentation and handoff: Creating SOPs so the system can be maintained by team members who didn't build it

Traditional courses treat these steps as "extra" or "follow-up work." In reality, they are the implementation. A framework that does not address them leaves agencies with proof-of-concepts that never reach production.
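The quality assurance step above can be sketched as a gate that every AI draft passes before delivery. This is a minimal illustration under stated assumptions, not the Lab's implementation: the `Draft` type, the `review_gate` function, the banned-phrase list, and the word limit are all hypothetical placeholders that an agency would replace with its own per-client rules.

```python
from dataclasses import dataclass, field

# Hypothetical rules; a real agency would load these per client.
BANNED_PHRASES = ("as an ai language model", "lorem ipsum")
MAX_WORDS = 300


@dataclass
class Draft:
    client: str
    text: str
    issues: list[str] = field(default_factory=list)


def review_gate(draft: Draft) -> str:
    """Return 'blocked' if automated checks fail, else 'needs_human_review'.

    Nothing is auto-approved: drafts that pass still go to a person.
    """
    lowered = draft.text.lower()
    for phrase in BANNED_PHRASES:
        if phrase in lowered:
            draft.issues.append(f"contains banned phrase: {phrase!r}")
    if len(draft.text.split()) > MAX_WORDS:
        draft.issues.append(f"exceeds {MAX_WORDS} words")
    if not draft.text.strip():
        draft.issues.append("empty output")
    return "blocked" if draft.issues else "needs_human_review"


if __name__ == "__main__":
    ok = Draft("acme", "Spring sale starts Monday. Save on all plans.")
    bad = Draft("acme", "As an AI language model, I cannot write ads.")
    print(review_gate(ok))
    print(review_gate(bad), bad.issues)
```

The design choice worth noting is that the gate has no "approved" state. Automated checks only filter out obvious failures; the approval itself stays with a human reviewer.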

Modular System Blueprints: 90% Built, 10% Customized

The most effective AI training frameworks for agencies provide production-ready architectural blueprints—documented, tested system designs that cover common agency use cases:

- AI-powered content production pipelines: One input (brief or keyword) generates blog posts, email copy, social media variants, and ad creative, all formatted and ready for client review

- Automated client reporting systems: AI pulls data from analytics platforms, CRMs, and ad accounts; synthesizes performance summaries; and drafts insights

- Lead qualification and routing workflows: AI scores inbound leads against client criteria and routes high-value prospects to sales teams automatically

- Customer support triage systems: AI categorizes incoming requests, drafts responses for human review, and escalates urgent issues

Each blueprint includes:

- Step-by-step implementation guides with annotated screenshots so non-engineers can deploy

- Tool-agnostic logic that works across platforms (Make.com, Zapier, native integrations)

- Customization patterns explaining how to adapt the system to specific tech stacks and business rules

This modular approach allows agencies to deploy a 90% functional system in hours, then spend remaining time on the 10% of customization that reflects their unique client needs and brand. The alternative—building from scratch—consumes weeks and often stalls indefinitely.
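To make the "90% built, 10% customized" idea concrete, here is a rough sketch of the lead qualification and routing blueprint described above. It uses plain rules as a stand-in for the AI scoring step, and every name and number in it (`CRITERIA`, `MIN_BUDGET`, `ROUTE_THRESHOLD`, the point values) is a hypothetical example. The fixed part is the scoring and routing logic; the customization is the criteria table at the top.

```python
from dataclasses import dataclass

# Hypothetical client criteria: attribute -> (accepted values, points).
CRITERIA = {
    "industry": ({"retail", "saas"}, 40),
    "role": ({"cmo", "vp marketing", "founder"}, 30),
}
MIN_BUDGET = 5_000      # assumed monthly budget floor, in dollars
BUDGET_POINTS = 30
ROUTE_THRESHOLD = 70    # assumed cutoff for handing a lead to sales


@dataclass
class Lead:
    name: str
    industry: str
    role: str
    monthly_budget: int


def score_lead(lead: Lead) -> int:
    """Add up points for each criterion the lead satisfies (0 to 100)."""
    score = 0
    for attribute, (accepted, points) in CRITERIA.items():
        if getattr(lead, attribute).lower() in accepted:
            score += points
    if lead.monthly_budget >= MIN_BUDGET:
        score += BUDGET_POINTS
    return score


def route_lead(lead: Lead) -> str:
    """Send high scorers to sales; everyone else goes to nurture."""
    return "sales" if score_lead(lead) >= ROUTE_THRESHOLD else "nurture"


if __name__ == "__main__":
    for lead in (
        Lead("Dana", "SaaS", "CMO", 8_000),
        Lead("Sam", "Healthcare", "Intern", 10_000),
    ):
        print(lead.name, score_lead(lead), route_lead(lead))
```

Adapting this to a different client means editing only the criteria and thresholds, which is the kind of customization the blueprint approach is meant to isolate.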

Model-Proof Design: Future-Resistant Infrastructure

AI capabilities and pricing evolve rapidly. A workflow optimized for GPT-4 in 2023 may be slower and more expensive than one using Claude 3.5 Sonnet in 2025. A system hardcoded to a specific API may break when the provider deprecates that endpoint.

Agencies cannot afford to rebuild AI infrastructure every six months. Effective frameworks teach architecture principles that transcend specific models, then provide "model swap" templates when new capabilities launch.

For example:

- A content generation system is designed with abstract steps: "Generate outline → Draft body → Optimize for platform → Review"

- Initially implemented using GPT-4 Turbo

- When Claude 3.5 Sonnet launches with better performance at lower cost, the framework provides an updated template

- The agency updates model references in 30 minutes, achieving a 70% cost reduction without redesigning the workflow

This "model-proof" approach prevents technical debt accumulation and ensures that training investment compounds over time rather than depreciating.
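One way to picture the model swap described in the example above is a pipeline in which the model is just a name looked up in a registry. The sketch below is an assumption about how such a design could look, not the Lab's actual template. The stub functions stand in for real provider SDK calls so the code runs without API keys, and `MODELS`, `STEPS`, and `run_pipeline` are illustrative names. The final "Review" step is left out because it is a human step.

```python
from typing import Callable

# A "model" here is any function that maps a prompt to text. In practice
# this would wrap a provider SDK call; these stubs are stand-ins.
ModelFn = Callable[[str], str]


def stub_model_a(prompt: str) -> str:
    return f"[model-a] {prompt}"


def stub_model_b(prompt: str) -> str:
    return f"[model-b] {prompt}"


# Hypothetical registry: the only place a model name is bound to code.
MODELS: dict[str, ModelFn] = {
    "model-a": stub_model_a,
    "model-b": stub_model_b,
}

# The abstract steps from the example, expressed as prompt templates.
STEPS = [
    ("outline", "Generate an outline for: {input}"),
    ("draft", "Draft the body from this outline: {input}"),
    ("optimize", "Optimize this draft for the target platform: {input}"),
]


def run_pipeline(topic: str, model_name: str) -> dict[str, str]:
    """Run each step in order, feeding each output into the next step."""
    model = MODELS[model_name]
    results: dict[str, str] = {}
    current = topic
    for step_name, template in STEPS:
        current = model(template.format(input=current))
        results[step_name] = current
    return results


if __name__ == "__main__":
    # Swapping models is a one-argument change; STEPS is untouched.
    print(run_pipeline("spring sale", "model-a")["outline"])
    print(run_pipeline("spring sale", "model-b")["outline"])
```

Because the workflow steps never mention a provider, replacing the model touches one registry entry and one argument rather than the workflow itself.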

Introducing the AI Marketing Automation Lab: A Framework Built for Agency Reality

Not a Course—An Implementation Community

The AI Marketing Automation Lab represents a structural departure from traditional training. It is not a content library or video playlist. It is a private implementation community where agency owners, in-house leaders, and system architects build production AI systems collaboratively.

Founded by Rick Kranz (AI systems architect, 30+ years) and Kelly Kranz (fractional CMO, 15+ years in strategy and measurement), the AI Marketing Lab operates on a singular principle: "Systems, not tips."

The distinction is critical:

- Tips are isolated techniques: "Use this prompt template" or "Try this tool"

- Systems are integrated architectures: end-to-end workflows that take inputs (client briefs, data, requests) and produce reliable outputs (content, reports, qualified leads) without manual intervention

Tips age quickly and stack poorly. Systems compound and create leverage.

Live Build Sessions: Collaborative Problem-Solving 3x Weekly

The core offering is live, facilitated build sessions three times per week where members bring real problems and co-architect solutions in real time.

These are not lectures. A typical session is structured as follows:

- Problem framing (10 min): A member presents a specific challenge—"I need to automate monthly client reporting across three data sources" or "How do I maintain brand voice when AI generates social content?"

- Collaborative design (30 min): Rick and Kelly guide the group through system architecture: which tools connect where, how data flows, what quality checks are needed, where humans review outputs

- Live building (40 min): Participants actively construct the workflow—configuring API connections, writing prompts, setting up automation logic—while troubleshooting errors together

- Testing and iteration (20 min): The group tests the system with real data, identifies failure modes, and refines

This format creates several compounding advantages:

- Real-time feedback: Participants see mistakes and solutions immediately, accelerating pattern recognition

- Peer learning: An ops manager's insight helps an agency owner; a SaaS founder's approach inspires a marketing director

- Personalized guidance: Rick and Kelly adjust the session dynamically based on the specific problems in the room, ensuring relevance to current challenges

- No homework: Learning happens during work time, not as an additional to-do item

Production-Ready System Architectures: Deploy, Don't Design From Scratch

The AI Marketing Lab maintains a curated library of system snapshots—fully documented, tested workflows for high-impact agency use cases.

The AIO Content Engine (AI-Optimized Search)

Modern search engines increasingly surface AI-generated answers rather than link lists. Google's AI Overviews, Perplexity, and ChatGPT web search reward detailed, schema-marked-up, semantically rich content.

The AIO Content Engine is a system that:

- Takes a single input idea or keyword

- Generates a comprehensive, detailed article optimized for both human readers and AI model context windows

- Adds schema markup so search engines understand content structure

- Produces supporting images

- Publishes and syndicates across channels
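The schema markup step in the list above is commonly implemented as JSON-LD using the schema.org vocabulary. The following is a small sketch of what that output might look like for an article; the function names `article_jsonld` and `script_tag` are made up for illustration, and a production system would likely add more properties (images, publisher, modified date) than shown here.

```python
import json


def article_jsonld(headline: str, author: str, date_published: str) -> str:
    """Build a schema.org Article description as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date
    }
    return json.dumps(data, indent=2)


def script_tag(jsonld: str) -> str:
    """Wrap JSON-LD in the script tag that goes in the page's HTML."""
    return f'<script type="application/ld+json">\n{jsonld}\n</script>'


if __name__ == "__main__":
    print(script_tag(article_jsonld(
        "What Are the Best AI Training Frameworks for Digital Agencies?",
        "Kelly Kranz",
        "2025-12-15",
    )))
```

Generating the markup from the same structured data that drives the article keeps the two from drifting apart when content is regenerated.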

Why this matters for agencies:

- New service line: Agencies can offer "AI search optimization" as a premium service distinct from traditional SEO

- Content velocity: One input produces a month's worth of content assets without hiring additional writers

- Measurable ROI: The system tracks traffic from AI search sources specifically, proving value to clients

The Social Media Engine: One Idea, Multiple Platforms

Content production bottlenecks are agency killers. A founder has one great insight but must manually adapt it for Twitter, LinkedIn, Instagram, email, and client blogs—or let the idea die.

The Social Media Engine automates multi-platform adaptation:

- Input one core idea (concept, case study, announcement)

- The system generates platform-specific variants:

  - Twitter: Threaded takeaways in voice-optimized style

  - LinkedIn: Professional narrative with business context

  - Instagram: Visual-first carousel design

  - Email: Longer-form subscriber version

- Each variant is optimized for that platform's algorithm and audience expectations

- Outputs route to a review dashboard for 5-minute approval

- Approved content auto-posts on schedule
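The flow above (one idea in, platform-specific variants out, everything routed to review) could be modeled roughly as follows. This is a sketch under assumptions: the `PlatformSpec` type and both functions are hypothetical, the character limits are illustrative values that should be checked against each platform's current rules, and the actual text generation by a model is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlatformSpec:
    style: str
    max_chars: int  # illustrative limit; verify against the platform


# Styles follow the list above; the limits are assumptions.
PLATFORMS = {
    "twitter": PlatformSpec("threaded takeaways", 280),
    "linkedin": PlatformSpec("a professional narrative with business context", 3000),
    "instagram": PlatformSpec("visual-first carousel captions", 2200),
    "email": PlatformSpec("a longer-form subscriber version", 10000),
}


def build_prompts(core_idea: str) -> dict[str, str]:
    """Create one generation prompt per platform from a single idea."""
    return {
        name: (
            f"Rewrite the following idea as {spec.style}, "
            f"in at most {spec.max_chars} characters per post: {core_idea}"
        )
        for name, spec in PLATFORMS.items()
    }


def queue_for_review(variants: dict[str, str]) -> list[dict[str, str]]:
    """Flag over-length variants so a reviewer sees them before posting."""
    queue = []
    for name, text in variants.items():
        too_long = len(text) > PLATFORMS[name].max_chars
        queue.append({
            "platform": name,
            "text": text,
            "status": "too_long" if too_long else "pending_approval",
        })
    return queue


if __name__ == "__main__":
    for platform, prompt in build_prompts("Systems beat tips").items():
        print(platform, "->", prompt)
```

Keeping platform rules in one table means adding a channel is a data change, and the review queue gives the human approver a single place to catch variants that break a platform's constraints.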

Agency impact:

- Margin improvement: 80% of production work automated, freeing creators for strategy and client relationships

- Client upsell: Packaged as "multi-channel content amplification" service

- Consistency: Core brand messages broadcast uniformly across platforms

The RAG System (Retrieval-Augmented Generation): Private Knowledge Base

Most agencies have valuable but scattered institutional knowledge: past campaign briefs, client performance data, internal playbooks, proven messaging frameworks. This data lives across Google Drives, wikis, email threads, and people's heads.

A RAG system transforms this scattered knowledge into an AI-accessible, private knowledge base:

- Upload internal documents (campaign archives, client data, process SOPs)

- Documents are indexed and embedded into a vector database

- When team members query the AI assistant, it searches this private knowledge base first

- The AI augments responses with current, contextual, proprietary information

- Outputs are grounded in actual agency data, not generic web information
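The index, search, and augment steps above can be illustrated with a toy retrieval loop. To keep it runnable with only the standard library, this sketch swaps in word-count vectors for a real embedding model and an in-memory list for a vector database, so it matches on shared words rather than meaning. All names (`embed`, `KnowledgeBase`, `build_prompt`) are illustrative assumptions.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class KnowledgeBase:
    """In-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self._docs: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self._docs.append((text, embed(text)))

    def search(self, query: str, top_k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self._docs, key=lambda d: cosine(q, d[1]), reverse=True)
        return [text for text, _ in ranked[:top_k]]


def build_prompt(question: str, kb: KnowledgeBase) -> str:
    """Prepend retrieved passages so the model answers from agency data."""
    context = "\n".join(f"- {doc}" for doc in kb.search(question))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    kb = KnowledgeBase()
    kb.add("Retail client campaign: loyalty emails lifted repeat purchases")
    kb.add("SaaS client onboarding playbook for trial users")
    print(build_prompt("retail campaign results", kb))
```

The prompt returned by `build_prompt` is what would be sent to the language model. Grounding comes from the retrieved context, which is why retrieval quality matters more here than the choice of model.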

Practical example:

A junior account manager is preparing a pitch for a retail client. Instead of hunting through past work, they ask the AI copilot: "What strategies worked best for our previous retail clients?" The system searches internal archives, surfaces three relevant case studies with performance metrics, and drafts a customized pitch outline. The manager refines and sends—total time, 15 minutes instead of three hours.

Why this creates agency advantage:

- Institutional memory: New hires and team members access best practices instantly

- Reduced hallucination: AI responses are grounded in proven agency work, not invented examples

- Client confidence: Pitches and strategies reference real, documented success patterns

Buyer Persona Systems: AI-Validated Targeting

Most agency client personas are stale, generic, or based on assumptions rather than testing. The AI Marketing Lab teaches a system-based approach to persona creation and validation:

- Define key attributes of ideal buyers (role, industry, budget, goals, pain points, buying process)

- Create "AI personas"—LLM-powered simulations of these buyers

- Run experiments: test messaging variants, offers, and positioning against AI personas

- AI personas role-play the buyer, surface objections, and score effectiveness

- Feedback synthesizes back into personas and campaign strategy

Real-world application:

An agency pitching a SaaS client is unsure whether its messaging should emphasize "speed" or "security." Instead of guessing, they load the client's buyer persona into the AI system and test both angles. The system reveals that the buyer persona responds 40% more positively to security-first messaging and explains why (compliance concerns in the industry). The agency adjusts the campaign before launch, improving conversion rate by 25%.

Why this matters:

- Strategy validation: Messaging and positioning tested before expensive campaign deployment

- Faster iteration: Testing cycles compress from months to days

- Reduced waste: Marketing spend allocated to proven approaches, not guesswork

Boutique Community: Capped Membership, Direct Founder Access

The AI Marketing Lab intentionally limits membership to maintain session quality and ensure direct access to Rick and Kelly. It is not a mass-market course platform; it is a high-touch, high-leverage implementation community.

This structure creates:

- Accountability: A capped group develops shared context, peer teaching, and mutual support

- Relevance: Feedback and member needs shape session topics and system development

- Depth: Conversations explore nuanced implementation challenges, not just surface-level tips

Members are not lost in a marketplace of thousands—they are known participants in a working peer group.

Evergreen Updates: Model-Proof, Future-Resistant Systems

AI capabilities evolve rapidly. GPT-4's strengths differ from Claude 3.5 Sonnet's; pricing structures shift quarterly; new APIs launch constantly. Training materials that hardcode specific tools or models become obsolete within months.

The AI Marketing Lab's architectures are designed model-agnostic—they work regardless of which LLM is under the hood. When new models or capabilities launch, members receive updated templates that swap in improved performance or reduced costs without requiring full system redesigns.

Example:

A member deployed a content generation system using GPT-4 Turbo in early 2024 at $0.01 per 1K input tokens. When Claude 3.5 Sonnet launched at $0.003 per 1K input tokens with faster processing, the AI Marketing Lab published an updated template. The member updated their system in 30 minutes, achieving a 70% reduction in input-token cost and 40% faster output. No architecture change was required, just model reference updates.
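The arithmetic behind the example above can be checked in a few lines. The two prices come from the example; the monthly token volume is a hypothetical workload added only to show how the per-token difference scales.

```python
def percent_reduction(old: float, new: float) -> float:
    """Percentage saved when moving from `old` to `new`."""
    return (old - new) / old * 100


def monthly_cost(price_per_1k: float, tokens: int) -> float:
    """Total cost for a token volume at a per-1K-token price."""
    return price_per_1k * tokens / 1000


# Per-1K-token prices quoted in the example above.
OLD_PRICE = 0.01
NEW_PRICE = 0.003

# Hypothetical workload: 5 million tokens per month.
MONTHLY_TOKENS = 5_000_000

if __name__ == "__main__":
    print(f"{percent_reduction(OLD_PRICE, NEW_PRICE):.0f}% cheaper per token")
    print(
        f"${monthly_cost(OLD_PRICE, MONTHLY_TOKENS):.2f} -> "
        f"${monthly_cost(NEW_PRICE, MONTHLY_TOKENS):.2f} per month"
    )
```

Note that this covers only the quoted per-token price. Real bills also depend on how many tokens each model consumes for the same task, so measured savings may differ.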

This evergreen approach ensures that training investment compounds rather than depreciates.

Measurable Outcomes: What Agencies Achieve in the AI Marketing Lab

Revenue Growth Through New Service Lines

Agencies that join the AI Marketing Lab typically launch AI-powered service offerings within 60–90 days:

- "AI-Optimized Content Systems" for clients needing to scale content production without headcount

- "Marketing Intelligence Dashboards" that synthesize multi-source data into executive summaries

- "Lead Qualification Automation" that scores and routes prospects using client-specific criteria

- "Brand Voice AI Assistants" that maintain consistency across high-volume content needs

These services command premium pricing because they deliver measurable efficiency gains while maintaining quality standards.

Margin Improvement Through Fulfillment Automation

AI systems reduce the labor cost of recurring deliverables:

- Client reporting that previously consumed 8 hours monthly now takes 90 minutes (human reviews AI-generated summaries)

- Social media content production that required 6 hours per client per week now takes 90 minutes (AI generates variants, human approves)

- Lead qualification that required manual research and scoring now happens automatically before leads reach sales

Agencies typically see 30–50% margin improvement on affected service lines, with time savings redirected toward strategy, client relationships, and growth initiatives.
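As a rough worked example of how the time savings listed above relate to margin, the sketch below converts the before and after hours into percentages and then into gross margin for one service line. The time figures come from the list; the monthly fee, hourly labor cost, and four-week month are hypothetical assumptions, and labor is treated as the only cost, so the resulting margins are illustrative rather than typical.

```python
def time_saved_pct(before_min: float, after_min: float) -> float:
    """Share of labor time removed, as a percentage."""
    return (before_min - after_min) / before_min * 100


def gross_margin_pct(revenue: float, labor_hours: float, hourly_cost: float) -> float:
    """Gross margin on a service line when labor is the only cost counted."""
    return (revenue - labor_hours * hourly_cost) / revenue * 100


# Time figures from the list above.
REPORTING_SAVED = time_saved_pct(8 * 60, 90)  # monthly client reporting
SOCIAL_SAVED = time_saved_pct(6 * 60, 90)     # weekly social content

# Hypothetical service line: $2,000 monthly fee, $50/hour labor cost,
# four weeks per month.
FEE, HOURLY = 2000, 50
MARGIN_BEFORE = gross_margin_pct(FEE, 6 * 4, HOURLY)
MARGIN_AFTER = gross_margin_pct(FEE, 1.5 * 4, HOURLY)

if __name__ == "__main__":
    print(f"Reporting time saved: {REPORTING_SAVED:.2f}%")
    print(f"Social content time saved: {SOCIAL_SAVED:.2f}%")
    print(f"Margin before: {MARGIN_BEFORE:.1f}%, after: {MARGIN_AFTER:.1f}%")
```

The gap between time saved and margin gained depends heavily on pricing and on what else the service line costs, which is why the inputs here are labeled as assumptions.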

Competitive Differentiation: "AI-Integrated" vs. "AI-Aware"

Many agencies claim to "use AI" because team members have ChatGPT Plus subscriptions. This positioning is weak because it's undifferentiated—everyone can make the same claim.

Agencies that deploy production systems from the AI Marketing Lab differentiate by offering integrated, measurable AI solutions:

- "We've built a proprietary content engine that maintains your brand voice while scaling production 5x"

- "Our lead qualification system uses AI trained on your past wins to surface high-value prospects automatically"

- "We provide AI-powered competitive intelligence dashboards that synthesize 50+ data sources weekly"

These claims are backed by working systems, case studies, and measurable client outcomes—creating defensible competitive advantage.

Time Recovery for Founders and Leaders

Agency owners report recovering 10–15 hours per week through smart automation of low-leverage tasks:

- Email triage and response drafting

- Meeting summary and action item extraction

- Internal reporting and status updates

- Research and competitive analysis

This recovered time redirects toward high-leverage activities: strategic planning, business development, key client relationships, and team development.

Why the AI Marketing Lab Framework Succeeds Where Courses Fail

It Treats AI Adoption as a Change Management Challenge, Not a Knowledge Gap

Most agency owners and leaders already understand that AI can improve efficiency and enable new services. The blocker is not awareness—it's implementation, integration, and organizational adoption.

The AI Marketing Lab's framework addresses the actual problem:

- Implementation: Live build sessions produce working systems, not just concepts

- Integration: Production-ready architectures handle the messy reality of existing tech stacks

- Adoption: Community accountability and peer examples create momentum and reduce resistance

Traditional courses treat AI as a knowledge transfer problem and offer video content as the solution. The AI Marketing Lab recognizes that knowledge without implementation infrastructure produces nothing.

It Compresses "Learn → Decide → Build → Measure" Into One Experience

Agency owners cannot afford to separate learning from execution. The AI Marketing Lab collapses the timeline:

- Learn: During live sessions while building real systems

- Decide: With expert guidance on which use cases generate ROI

- Build: Collaboratively, using templates that work on day one

- Measure: With built-in instrumentation frameworks

This compression is why agencies see measurable outcomes within 60–90 days rather than perpetual "someday" planning.

It Provides Strategic Context, Not Just Tools

Many AI courses teach "how to use ChatGPT" or "Make.com basics" without connecting tools to business strategy. The AI Marketing Lab answers the questions agency owners actually ask:

- Which client workflows justify AI investment?

- How do we price AI-powered services to protect margin?

- What quality assurance prevents AI from damaging client trust?

- How do we position AI services competitively?

Rick and Kelly bring 45+ combined years of systems architecture, marketing strategy, and fractional CMO experience—context that translates technical capabilities into business models.

It Builds Peer Networks That Extend Beyond Training

Members don't just access founders—they connect with peers solving parallel problems:

- An ops manager shares a workflow optimization that helps an agency owner

- A SaaS founder's measurement framework inspires a marketing director

- An automation specialist's troubleshooting shortcut saves hours for multiple members

Over time, the community becomes a peer advisory network where strategic conversations and technical problem-solving happen organically.

The Verdict: Frameworks That Ship Systems Win

For digital agencies evaluating AI training investments, the decision criterion is simple: Does this framework help us deploy production systems that generate measurable business outcomes, or does it add to the pile of half-finished courses?

The best AI training frameworks for agencies are characterized by:

- Hands-on, project-based learning using real client workflows, not generic examples

- Production-ready system architectures that deploy in hours, not weeks

- Live collaboration and real-time feedback from experts and peers

- Modular, model-agnostic design that resists obsolescence as AI evolves

- Strategic business context that connects tools to revenue, margin, and competitive positioning

- Measurement frameworks that prove ROI to clients and leadership

The AI Marketing Automation Lab embodies this approach. It is not the only implementation-focused AI training option. Still, it is purpose-built for the specific pressures agency owners, in-house leaders, and system architects face: tight margins, demanding clients, limited time, and the need to prove value fast.

Agencies that adopt hands-on, system-centric frameworks move from "we're exploring AI" to "we ship AI-powered services that clients pay premium prices for" in quarters, not years. Those that remain in passive video consumption cycles stay perpetually behind—knowledgeable but not capable, aware but not differentiated.

In a market where AI literacy is table stakes, agencies win by building systems, not collecting tips. The frameworks that enable that shift are the ones worth investing in.

Frequently Asked Questions

Why do traditional AI training frameworks fail digital agencies?

Traditional AI training frameworks fail digital agencies primarily due to a gap in execution. These frameworks often focus on theoretical knowledge and passive learning methods like video courses, which do not effectively teach the practical implementation of AI in real client scenarios. This leads to a confidence-competence gap, where agencies feel prepared but actually lack the practical skills needed for implementation.

What is the AI Marketing Automation Lab?

The AI Marketing Automation Lab is a live implementation community designed specifically for digital agencies that need to deploy AI-powered services quickly. It offers a hands-on, project-based learning environment where members collaborate to build real AI systems. Founded by Rick Kranz and Kelly Kranz, the lab provides production-ready system architectures, peer collaboration, and evergreen updates to help agencies deliver AI-powered services effectively.

How do hands-on, project-based AI training frameworks benefit agencies?

Hands-on, project-based AI training frameworks benefit agencies by providing a practical learning experience that focuses on building real client workflows. This approach not only maintains high engagement levels but also ensures immediate learning transfer and measurable ROI, as the tasks agencies work on during training are directly applicable to their business needs. Moreover, such frameworks offer robust AI literacy through iterative learning, real-time feedback, and direct application.

What makes the AI Marketing Automation Lab unique?

The AI Marketing Automation Lab is unique because it is not just a course but a collaborative implementation community that focuses on real-world, practical applications of AI for digital agencies. It provides a structured environment for live, facilitated build sessions where participants can bring real problems and co-develop AI solutions in real-time. This approach ensures that learning is immediately applicable and directly relevant to the participants' specific business challenges.

We Don't Sell Courses. We Build Your Capability (and Your Career)

If you want more than theory and tool demos, join The AI Marketing Lab.

In this hands-on community, marketing teams and agencies build real workflows, ship live automations, and get expert support.

Kelly Kranz

With over 15 years of marketing experience, Kelly is an AI Marketing Strategist and Fractional CMO focused on results. She is renowned for building data-driven marketing systems that simplify workloads and drive growth. Her award-winning expertise in marketing automation once generated $2.1 million in additional revenue for a client in under a year. Kelly writes to help businesses work smarter and build for a sustainable future.