How Do You Measure the Effectiveness of AI Training in Marketing?

AI Training • Dec 15, 2025 3:24:32 PM • Written by: Kelly Kranz

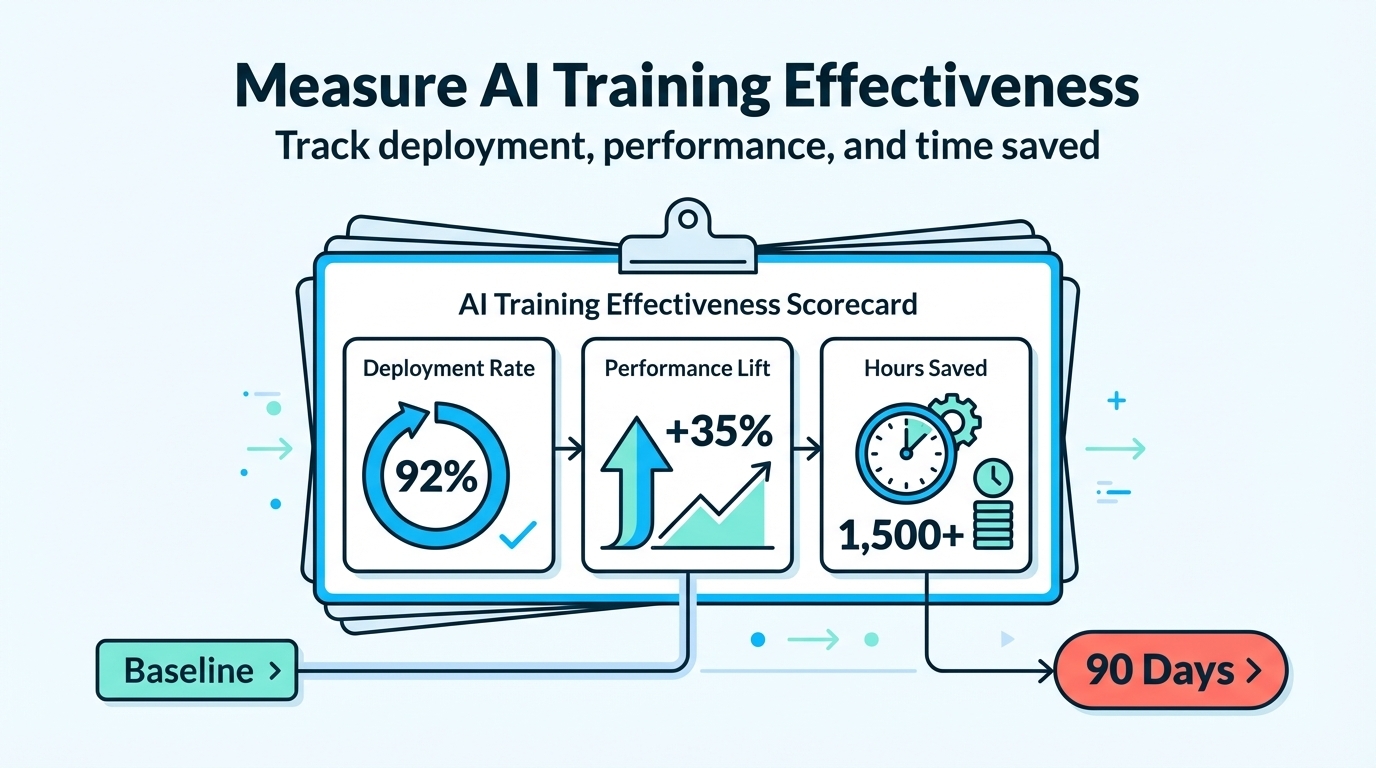

Measure AI training effectiveness by tracking three metrics: skill application rate (are learners deploying what they learned?), campaign performance improvements (speed, conversion, cost reduction), and time saved per workflow. Use pre/post assessments comparing baseline metrics to post-training outcomes within 30-90 days.

TL;DR

Decision-makers need ROI proof before scaling AI training investments. Effective measurement tracks whether training translates to deployed systems, measurable campaign improvements, and quantifiable time savings—not just completion rates or satisfaction scores. This article contrasts passive video training (which produces knowledge without application) against hands-on, implementation-focused approaches that generate measurable business outcomes. The AI Marketing Automation Lab demonstrates how live build sessions, production-ready templates, and accountability structures compress the learn-implement-measure cycle into 60-90 days with clear KPIs tied to revenue, margin, and efficiency.

The Hidden Problem: Why Most AI Training Metrics Mislead Leaders

The Illusion of Progress

Most organizations measure AI training effectiveness using metrics borrowed from traditional corporate learning:

- Completion rates – Did people finish the course?

- Satisfaction scores – Did learners enjoy the content?

- Knowledge assessments – Can they answer multiple-choice questions about AI concepts?

These metrics feel reassuring. A 95% completion rate and 4.8-star reviews suggest the training "worked." But they measure consumption, not capability. They tell you whether people watched videos, not whether those videos changed how the business operates.

The gap becomes obvious when leadership asks follow-up questions:

- "Which campaigns are now using AI?"

- "How much time are we saving?"

- "What's the revenue impact?"

If the answer is vague—"People are exploring use cases" or "We're building awareness"—the training failed, regardless of the completion rate.

Why Passive Learning Produces Confident Non-Performers

Research on corporate training reveals a troubling pattern: passive methods (watching lectures, reading case studies, taking quizzes) often leave learners feeling confident while objective performance remains poor. One analysis found that active learning approaches improved outcomes by 54% over passive methods, even though passive learners reported feeling like they learned more.

For AI training specifically, this confidence-competence gap is dangerous. A marketer who completes a 10-hour video course on "AI for Marketing" may understand what generative AI is and why it matters. But when they sit down to actually build a workflow—integrate an AI model into their CRM, design a prompt pattern that produces usable outputs, or measure whether AI-generated content performs better than human-written content—they hit a wall.

The training gave them awareness, not execution capability. And awareness without execution is expensive theater.

The Questions Passive Training Cannot Answer

Implementation problems are fundamentally different from knowledge problems:

- "How do we plug this into our existing tech stack?" – Video courses show idealized demos, not how to debug API failures or adapt templates to your CRM's quirks.

- "What prompt pattern actually produces usable outputs for our brand voice?" – Generic prompt examples don't account for your specific industry, customer language, or quality thresholds.

- "How do we roll this out without breaking our current process?" – Training videos don't address change management, team resistance, or workflow redesign.

These are the real blockers to AI adoption. And they can only be solved by doing the work, making mistakes in safe environments, getting feedback, and iterating—none of which happen in passive learning formats.

What "Effectiveness" Actually Means: The Three-Metric Framework

Metric 1: Skill Application Rate (Deployment Over Time)

What it measures: The percentage of training participants who deploy AI systems or workflows into production within a defined window (typically 30, 60, or 90 days post-training).

Why it matters: This is the single most predictive metric of training ROI. If someone completes training but never builds or deploys anything, the training delivered zero business value—regardless of how much they learned.

How to track it:

- Define "deployment" clearly: A system is live, processing real work, and used by the team regularly.

- Baseline the number at training start (typically zero or minimal ad-hoc AI usage).

- Measure monthly: How many participants have at least one deployed workflow?

- Track depth: How many workflows per participant?

Target benchmark: For implementation-focused training, aim for a 60-80% deployment rate within 90 days. For passive courses, expect 5-15%.

Real-world example: An agency owner joins training with zero AI workflows in production. Within 60 days, they deploy three systems: an AI content pipeline for client blogs, an automated reporting workflow, and a lead-qualification bot. That's measurable application.
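A minimal sketch of how the deployment rate and depth described above could be computed from a simple participant log. The `Participant` record, its `deployed_on_day` field, and the sample cohort are hypothetical and only illustrate the calculation; they are not tied to any particular tracking tool.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Participant:
    name: str
    # Days after training start on which each workflow went live (hypothetical field).
    deployed_on_day: list[int] = field(default_factory=list)


def application_rate(participants: list[Participant], window_days: int) -> float:
    """Share of participants with at least one workflow live within the window."""
    if not participants:
        return 0.0
    deployed = sum(
        1 for p in participants
        if any(day <= window_days for day in p.deployed_on_day)
    )
    return deployed / len(participants)


def workflows_per_participant(participants: list[Participant], window_days: int) -> float:
    """Average number of workflows live per participant within the window (depth)."""
    if not participants:
        return 0.0
    total = sum(
        sum(1 for day in p.deployed_on_day if day <= window_days)
        for p in participants
    )
    return total / len(participants)


# Illustrative cohort only; the numbers are made up.
cohort = [
    Participant("A", [20, 45, 58]),  # three systems live by day 60
    Participant("B", [75]),
    Participant("C", []),            # completed training, deployed nothing
    Participant("D", [30, 88]),
]

for window in (30, 60, 90):
    rate = application_rate(cohort, window)
    depth = workflows_per_participant(cohort, window)
    print(f"Day {window}: {rate:.0%} deployed, {depth:.2f} workflows per participant")
```

Keeping rate and depth as separate numbers matters: a cohort can show high depth driven by one or two power users while most participants have deployed nothing.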

Metric 2: Campaign Performance Improvements (Speed, Quality, Conversion)

What it measures: Changes in key marketing and revenue metrics after AI systems are deployed, compared to baseline performance before training.

Why it matters: Training is a cost center. To justify continued or expanded investment, leaders need to see direct impact on business outcomes—not just "people are using AI."

Common sub-metrics to track:

- Time savings per workflow: How much faster is campaign creation, reporting, or content production? (Target: 30-50% reduction)

- Cost per output: Did AI reduce the labor cost of producing assets? (Target: 20-40% savings)

- Conversion improvements: Are AI-optimized campaigns performing better? (Target: 10-25% lift in conversion, CTR, or engagement)

- Volume scalability: Can the team produce more output without adding headcount? (Target: 2-3x output increase)

How to establish baseline:

- Before training, document current metrics:

- Average time to produce a campaign brief, blog post, or ad set.

- Conversion rates on landing pages, email sequences, or ads.

- Cost per lead or cost per acquisition.

- Team capacity (e.g., "We can publish 8 blog posts per month with current resources").

- After training and deployment, measure the same metrics monthly.

Real-world example: A marketing director's team previously spent 6 hours drafting monthly client reports manually. After deploying an AI-powered reporting workflow (learned and built during training), the same reports take 45 minutes. That's an 87.5% time reduction (360 minutes down to 45): quantifiable, attributable, and defensible to the CFO.
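The baseline comparison can be reduced to a single percent-change calculation applied to each sub-metric. The sketch below assumes baseline and post-deployment values are recorded in the same units; apart from the report timing taken from the example above, the metric names and values are hypothetical placeholders.

```python
def percent_change(baseline: float, current: float) -> float:
    """Signed percent change from baseline; a negative value means a reduction."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) / baseline * 100


# (baseline, post-deployment) pairs. Only the first row comes from the example
# above; the other rows are invented for illustration.
metrics = {
    "report_minutes": (360, 45),            # 6 hours down to 45 minutes
    "cost_per_blog_post": (200.0, 140.0),
    "landing_page_conversion": (0.040, 0.046),
    "posts_per_month": (8, 20),
}

for name, (before, after) in metrics.items():
    print(f"{name}: {percent_change(before, after):+.1f}%")
```

Whether a negative number is good depends on the metric: a drop in minutes or cost is an improvement, while a drop in conversion is not, so each metric should be labeled with its desired direction before it is reported.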

Metric 3: Time Saved Per Workflow (Recovered Capacity)

What it measures: The total hours per week or month recovered by automating or AI-augmenting specific tasks, expressed both as absolute time and as a percentage of previous effort.

Why it matters: Time is the scarcest resource for most teams. If AI training enables the team to reclaim 10-15 hours per person per week, that capacity can be redirected to higher-leverage work (strategy, relationships, creative problem-solving) or used to scale output without hiring.

How to track it:

- Identify repetitive, time-consuming tasks before training (e.g., manual data entry, content drafting, reporting, lead research).

- Time those tasks at baseline (use time-tracking or estimate conservatively).

- After deploying AI workflows, re-time the same tasks.

- Calculate weekly or monthly time savings per person and multiply by team size.

Target benchmark: High-impact AI training should recover 8-12 hours per person per week. For comparison, one reported estimate puts AI's productivity boost at the equivalent of one workday per week, or an average of 7.5 hours saved per employee per week.

Real-world example: A solo founder spent 12 hours per week on customer support triage (reading tickets, categorizing, drafting initial responses). After deploying an AI triage system (built during training), the same work takes 3 hours per week. That's 9 hours per week recovered—468 hours annually—allowing the founder to focus on sales and product instead of inbox management.
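The tracking steps above amount to a before-and-after timing per task, summed and annualized. This is a rough sketch under stated assumptions: the `TaskTiming` record and `summarize` helper are hypothetical names, 52 working weeks per year is assumed, and multiplying by team size assumes every team member performs the task.

```python
from dataclasses import dataclass


@dataclass
class TaskTiming:
    task: str
    baseline_hours_per_week: float
    current_hours_per_week: float

    @property
    def hours_saved(self) -> float:
        return self.baseline_hours_per_week - self.current_hours_per_week

    @property
    def percent_saved(self) -> float:
        return self.hours_saved / self.baseline_hours_per_week * 100


def summarize(timings, team_size=1, weeks_per_year=52):
    """Total weekly and annual hours recovered across tasks and team members."""
    weekly = sum(t.hours_saved for t in timings) * team_size
    return {"weekly_hours": weekly, "annual_hours": weekly * weeks_per_year}


# Figures from the solo-founder example above: 12 hours/week down to 3.
triage = TaskTiming("support triage", 12, 3)
summary = summarize([triage])

print(f"{triage.task}: {triage.hours_saved} h/week saved ({triage.percent_saved:.0f}%)")
print(f"Annualized: {summary['annual_hours']} hours")
```

Reporting both the absolute hours and the percentage keeps small tasks with large percentage gains from being confused with the tasks that actually recover the most capacity.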

Why Traditional Training Fails to Deliver These Metrics

The Passive Video Problem: Consumption Without Application

Most AI training today is delivered as video libraries: 20-50 hours of pre-recorded lectures covering AI concepts, use cases, tool demos, and case studies. Learners watch on their own schedule, take quizzes, and receive a certificate.

The structural flaws:

- No forcing function to build: Watching someone else build a workflow is passive observation. The learner never confronts the messy details—API errors, missing data fields, unclear requirements—that are where real learning happens.

- Generic examples, not custom solutions: Video content must appeal to a broad audience, so examples are simplified and abstracted. But your business has specific tools, workflows, and constraints that don't match the demo.

- No accountability or feedback loop: In self-paced formats, there's no one checking whether you actually deployed anything or whether your deployment works. The course "ends" when you finish the videos, regardless of whether you changed anything in your business.

- Rapid obsolescence: AI tools and models evolve constantly. A video recorded six months ago may reference deprecated features, outdated pricing, or suboptimal approaches. Static libraries age poorly.

The result: Learners feel informed and may even feel confident. But when they try to build something real, they discover the knowledge didn't transfer. The training becomes a credential, not a capability.

The Busy Owner Trap: No Time for "Homework"

For agency owners, founders, and in-house leaders, time is the binding constraint. A 40-hour video course becomes "something I'll do next quarter," then "next year," then never.

Even when they start, passive courses demand ongoing discipline: watch videos, take notes, try exercises, come back next week. But real work—client calls, campaign deadlines, fires to put out—always takes priority. The course library becomes guilt: a reminder of something they paid for but didn't finish.

Why this matters for measurement: If people don't finish the training or don't apply it, there are no metrics to measure. The ROI is zero by definition.

Busy professionals need training that is inseparable from work—where "learning" and "building" are the same activity, not sequential steps.

The Solution: Implementation-First Training and Built-In Measurement

Hands-On, Live Build Sessions: Learning by Doing in Real Time

The most effective AI training for marketers and business leaders isn't a course—it's a working session where participants solve real problems with guidance.

How it works:

- Participants join live sessions (in-person or virtual) with a clear goal: "Build an AI workflow that does X."

- A facilitator provides brief context (10-15 minutes), then participants work on their own systems while the facilitator troubleshoots, answers questions, and demonstrates solutions in real time.

- The session ends when participants have a working prototype or a clear next step—not when the content is "covered."

Why this structure drives measurable outcomes:

- Immediate application: Participants leave with something they built, not something they heard about.

- Real-world friction: They encounter and solve the actual problems they'll face in production (API quirks, unclear prompts, integration errors), which builds confidence and resilience.

- Peer learning: Other participants are solving similar problems in different contexts, so the group learns patterns and variations organically.

- Built-in accountability: Showing up to a live session with a problem and leaving without attempting a solution feels incomplete. The format creates social and structural pressure to act.

Production-Ready System Architectures: Starting at 90% Instead of Zero

Another reason passive training fails is that it forces every learner to start from scratch. They watch a demo, then have to figure out how to translate that into their specific context. Most get stuck in the design phase.

The solution: Provide battle-tested, fully documented system templates that participants can deploy immediately and customize incrementally.

What "production-ready" means:

- Complete data flows: The template shows how data moves from trigger (e.g., new lead) through AI processing to output (e.g., updated CRM, drafted email).

- Tool flexibility: The architecture is described in tool-agnostic terms but includes specific implementation examples for common platforms (Make.com, Zapier, Airtable, native integrations).

- Error handling and quality checks: The template includes steps to catch bad outputs, handle API failures, and ensure reliability.

- Measurement hooks: The system has built-in tracking so you can measure time saved, cost per task, or output quality from day one.

Why this accelerates measurable outcomes:

Instead of spending weeks designing and testing a workflow from first principles, participants deploy a 90% functional system in hours, then spend their time on the valuable 10%—customizing it to their brand voice, connecting it to their specific tools, and tuning performance.

Embedded Measurement Frameworks: Defining Success Before You Build

Most training treats measurement as an afterthought: "Here's how to build this. Good luck measuring it later."

Implementation-first training flips this: measurement is defined before the workflow is built, so tracking is automatic.

How it works:

- Baseline current state: Before building, document how the process works today (time, cost, quality, volume).

- Define success metrics: What does "better" look like? Faster? Cheaper? Higher conversion? More volume?

- Build measurement into the system: Add tracking steps (time stamps, counters, quality scores) directly into the workflow.

- Review metrics regularly: Check weekly or monthly whether the system is delivering the expected improvement.
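One way to build tracking directly into a workflow is to wrap each automated step so that every run records a timestamped duration and a success flag. The sketch below is illustrative only: `measured`, `RUN_LOG`, and `build_report` are hypothetical names, and the in-memory log stands in for whatever sheet, database, or CRM field a real system would write to. It captures the execution time of the automated step; human review time would need to be logged separately.

```python
import time
from collections import defaultdict
from functools import wraps

# In-memory run log, keyed by workflow name (assumption: a real system would persist this).
RUN_LOG = defaultdict(list)


def measured(workflow_name, baseline_seconds):
    """Decorator that records duration and success for each run of a workflow step."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            ok = True
            try:
                return func(*args, **kwargs)
            except Exception:
                ok = False
                raise
            finally:
                # Runs whether the step succeeded or failed, so failures are counted too.
                RUN_LOG[workflow_name].append({
                    "started_at": time.time(),
                    "seconds": time.perf_counter() - start,
                    "baseline_seconds": baseline_seconds,
                    "ok": ok,
                })
        return wrapper
    return decorator


@measured("monthly_report", baseline_seconds=6 * 3600)
def build_report(client):
    # Placeholder for the AI-assisted steps of the real workflow.
    return f"Report for {client}"


build_report("Acme")
runs = RUN_LOG["monthly_report"]
success_rate = sum(r["ok"] for r in runs) / len(runs)
print(f"{len(runs)} run(s) logged, success rate {success_rate:.0%}")
```

Storing the baseline alongside each run means the weekly or monthly review can compare against the documented starting point without a separate lookup.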

Why this drives accountability:

When measurement is built in, there's no ambiguity about whether the training "worked." The data shows whether the system is saving time, improving performance, or scaling output. That clarity makes it easy to justify continued investment or to kill underperforming experiments quickly.

Iterative Learning Cycles: Deploy, Measure, Refine, Repeat

Static training treats learning as a one-time event: consume the content, take the test, you're done.

Implementation-first training treats learning as a cycle: deploy a system, measure its performance, return with questions and data, refine the system, repeat.

Why this matters:

- Real-world feedback: You only discover edge cases, failure modes, and optimization opportunities when a system is live and processing real work.

- Continuous improvement: Each iteration makes the system more reliable, faster, or cheaper—compounding the value over time.

- Confidence building: Early wins (even small ones) create momentum. Teams see that AI works, which reduces resistance and encourages broader adoption.

Measuring Training Effectiveness in Practice

Scenario: An Agency Owner's 90-Day Journey

Baseline (Day 0):

- Agency team: 8 people

- Monthly revenue: $85,000

- Avg. time to produce client monthly reports: 5 hours per client × 12 clients = 60 hours/month

- Avg. time to draft blog content for clients: 4 hours per post × 20 posts/month = 80 hours/month

- Total manual hours on repetitive tasks: 140 hours/month

- Team sentiment: Burned out, falling behind on deadlines

ROI Calculation:

The agency reported a 20% increase in monthly revenue after implementing the AI systems built during training.

- Training investment: $3,000 (membership + time invested)

- Annualized cost savings: $90,000

- Incremental revenue: 20% × $85,000 × 12 months = $204,000

- Total first-year gain: $90,000 + $204,000 = $294,000

- ROI: $294,000 ÷ $3,000 = 98x first-year return
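The arithmetic behind these figures can be checked with a short calculation. This is a sketch using only the numbers stated in the scenario; the $90,000 cost-savings figure is taken as given, and the function name `first_year_roi` is hypothetical. It reports both the gross multiple (total gain divided by cost) and the net multiple (gain minus cost, divided by cost), since the two are easy to conflate.

```python
def first_year_roi(monthly_revenue, revenue_lift_percent, annual_cost_savings, training_cost):
    """Return incremental revenue, total gain, and gross/net ROI multiples."""
    incremental_revenue = monthly_revenue * revenue_lift_percent / 100 * 12
    total_gain = annual_cost_savings + incremental_revenue
    return {
        "incremental_revenue": incremental_revenue,
        "total_gain": total_gain,
        "gross_multiple": total_gain / training_cost,
        "net_multiple": (total_gain - training_cost) / training_cost,
    }


result = first_year_roi(
    monthly_revenue=85_000,
    revenue_lift_percent=20,
    annual_cost_savings=90_000,  # taken as given from the scenario
    training_cost=3_000,
)

for key, value in result.items():
    print(f"{key}: {value:,.0f}")
```

The 98x figure is the gross multiple; net of the $3,000 training cost the return is 97x, which is the number to use if leadership defines ROI as net gain over cost.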

Measurement Clarity:

The owner can walk into a board meeting and say:

"We invested $3,000 in AI training. Within 90 days, we deployed three systems that saved 100 hours per month, which we redirected to sales and strategy. Revenue increased 20%, and we're on track for $300K in incremental value this year—without hiring."

That's the difference between passive training (no measurable outcome) and implementation-first training (clear, defensible ROI).

How the AI Marketing Automation Lab Solves the Measurement Problem

The "Systems, Not Tips" Philosophy

The AI Marketing Automation Lab's core principle, "Systems, not tips," is specifically designed to produce measurable outcomes.

Why "tips" don't scale:

Generic AI advice ("Use ChatGPT for email subject lines!") may produce one-off improvements but doesn't change how the business operates. There's no system to measure, no workflow to track, no baseline to compare against.

Why "systems" drive ROI:

A system is a repeatable, documented workflow that:

- Takes a defined input (e.g., new lead, content idea, support ticket)

- Processes it through AI and automation

- Produces a defined output (e.g., scored lead, drafted blog, categorized ticket)

- Can be measured at every step (time, cost, quality, volume)

When training teaches participants to build systems, measurement becomes automatic. The system either works (saves time, improves performance) or it doesn't. There's no ambiguity.

Implementation Checklist: How to Measure Your AI Training Effectiveness

Before Training Starts

- Document baseline metrics for the workflows you want to improve:

- Current time per task

- Current cost per output

- Current performance (conversion rates, lead response time, etc.)

- Current capacity (how much can your team produce?)

- Define success clearly: What does "effective training" look like in 30, 60, and 90 days?

- How many systems deployed?

- How much time saved per week?

- Which KPIs should improve, and by how much?

- Assign accountability: Who is responsible for tracking and reporting these metrics? (Don't assume it will happen automatically.)

During Training

- Track deployment progress weekly:

- How many participants have started building a system?

- How many have deployed a system into production?

- What blockers are preventing deployment?

- Measure early wins:

- Even small systems (e.g., automating one repetitive task) should be tracked for time savings or quality improvement.

- Celebrate and communicate wins to build momentum.

- Iterate based on feedback:

- If participants are stuck, adjust the training format (more hands-on time, better templates, clearer examples).

- If participants are deploying but not seeing results, troubleshoot the systems themselves.

Post-Training (30, 60, 90 Days)

- Audit Metric 1 (Skill Application Rate):

- What percentage of participants deployed at least one system?

- How many systems per participant on average?

- Audit Metric 2 (Campaign Performance Improvements):

- Compare post-training performance to baseline:

- Time per task (% reduction)

- Cost per output (% reduction)

- Conversion, engagement, or revenue metrics (% improvement)

- Audit Metric 3 (Time Saved):

- Total hours saved per week across all deployed systems

- Capacity recovered (can the team do more without hiring?)

- Calculate ROI:

- (Time saved × hourly cost) + (revenue improvement) - (training cost) = net return

- Divide net return by training cost and express the result as a multiple (e.g., "15x ROI in 90 days") for leadership communication.

Continuous Improvement

- Refine deployed systems:

- Use performance data to optimize (faster processing, lower cost, better outputs).

- Expand successful systems to more use cases or team members.

- Share learnings:

- Document what worked and what didn't.

- Create internal templates so other teams can replicate successful systems.

- Plan next phase:

- Based on ROI data, decide whether to expand AI training investment, focus on scaling existing systems, or explore new use cases.

Measure What Matters, or Don't Train at All

Most AI training today is measured by the wrong metrics—completion rates, satisfaction scores, test results—which tell you whether people consumed content, not whether the business improved.

Effective measurement focuses on three outcomes:

- Skill Application Rate: Are learners deploying systems?

- Campaign Performance Improvements: Are those systems making marketing faster, cheaper, or more effective?

- Time Saved: Is the team recovering capacity to focus on higher-leverage work?

Passive video training rarely delivers these outcomes because it's disconnected from real work. Implementation-first training—live build sessions, production-ready templates, and embedded measurement—compresses the learn-deploy-measure cycle into weeks instead of quarters.

If your AI training isn't producing deployed systems, measurable performance gains, and quantifiable time savings within 90 days, you're not training your team—you're entertaining them.

Measure what matters. Or don't train at all.

Frequently Asked Questions

What are the key metrics to measure the effectiveness of AI training in marketing?

The effectiveness of AI training in marketing can be measured by three main metrics: Skill Application Rate (the percentage of participants deploying AI systems post-training), Campaign Performance Improvements (measurable enhancements in marketing metrics post-deployment), and Time Saved Per Workflow (total hours saved by automating tasks with AI).

Why do traditional AI training metrics often mislead organizational leaders?

Traditional AI training metrics such as completion rates and satisfaction scores may mislead leaders as they focus on content consumption rather than capability development. These metrics indicate whether learners finished the course and enjoyed the content but do not measure the tangible application or business impact of the training.

How can AI training lead to real-world business improvements?

AI training leads to business improvements by emphasizing practical application over theoretical learning. Effective training involves hands-on, implementation-focused sessions where participants build and deploy AI systems, thereby facilitating immediate application and measurable business improvements like increased time savings, reduced costs, and higher productivity.

What is the role of production-ready system architectures in effective AI training?

Production-ready system architectures are crucial in AI training as they allow participants to start with a nearly complete system. Learners can immediately deploy these systems, which are designed to be adaptable to a specific business context, and focus on making incremental improvements. This approach leads to faster deployment, real-time problem solving, and immediate measurement of training efficacy.


Kelly Kranz

With over 15 years of marketing experience, Kelly is an AI Marketing Strategist and Fractional CMO focused on results. She is renowned for building data-driven marketing systems that simplify workloads and drive growth. Her award-winning expertise in marketing automation once generated $2.1 million in additional revenue for a client in under a year. Kelly writes to help businesses work smarter and build for a sustainable future.